In this blog post, our Head of Marketing, Matt Flenley, takes a closer look at the latest trends in data and information quality for 2023. He analyses predictions made by Gartner and how they’ve developed in line with expectations to provide insight into the evolution of the market and its various key players. Automation and AI are expected to play a central role in data management, and their impact on the industry will be examined in detail. Additionally, the importance of collaboration and interoperability in a consolidating industry will be highlighted, as well as the potential impact of macroeconomic factors such as labour shortages and recession headwinds on the implementation of these trends. Explore the impact of data profiling, data mesh, data fabric and data governance on the evolving data management landscape in this analysis.

A recent article by Gartner on predictions and outcomes in technology spend offered a fair assessment of the market predictions its analysts had made and the extent to which they had developed in line with expectations.

Rather than simply a headline-grabbing list of the way blockchain or AI will finally rule the world, it’s a refreshing way to explore how a market has evolved against a backdrop of expected and unexpected developments in the overall data management ecosystem and beyond.

For instance, while it was known pretty widely that the lessening day-to-day impact of the pandemic would see economies start to reopen, it was harder to predict with certainty that Russia would invade Ukraine to ignite a series of international crises, including cost-of-living, provision of food and energy and a new era of geopolitical turmoil long absent from Europe.

Additionally, the impacts of the UK’s decision to leave its customs union and single market with its biggest trading partner were yet to be fully realised as the year commenced. The UK’s job market has become increasingly challenging for firms attempting to recruit into professional services and technology positions. Reduced spending power in the UK’s economy, combined with rising inflation and a move into economic recession, will no doubt have an impact on organisations’ ability and willingness to make capital expenditures.

In that light, this review and preview will explore a range of topics and themes likely to prove pivotal, as well as the possible impact of macroeconomic nuances on the speed and scale of their implementation.

1. Automation is the key (but explain your AI!)

Any time humans have to be involved in the extraction, transformation or loading (ETL) of data, it costs a firm time and money, and increases risk. The same holds throughout the entire data value chain, wherever human hands are manipulating data for a downstream use. Human intervention adds value in complex tasks where nuance is required, but in tasks which are monotonous or require high throughput, errors can creep in.

A backdrop of labour shortages, and probable recession headwinds, means that automation is going to be first among equals when it comes to 2023’s probable market trends. Firms are going to be doing more with less, and finding every opportunity to exploit greater automation offered by their own development teams and the best of what they can find off the shelf. The advantages of this are two-fold: freeing up experts to work on more value-added tasks, and reducing the reliance on technical skills which are in high demand.

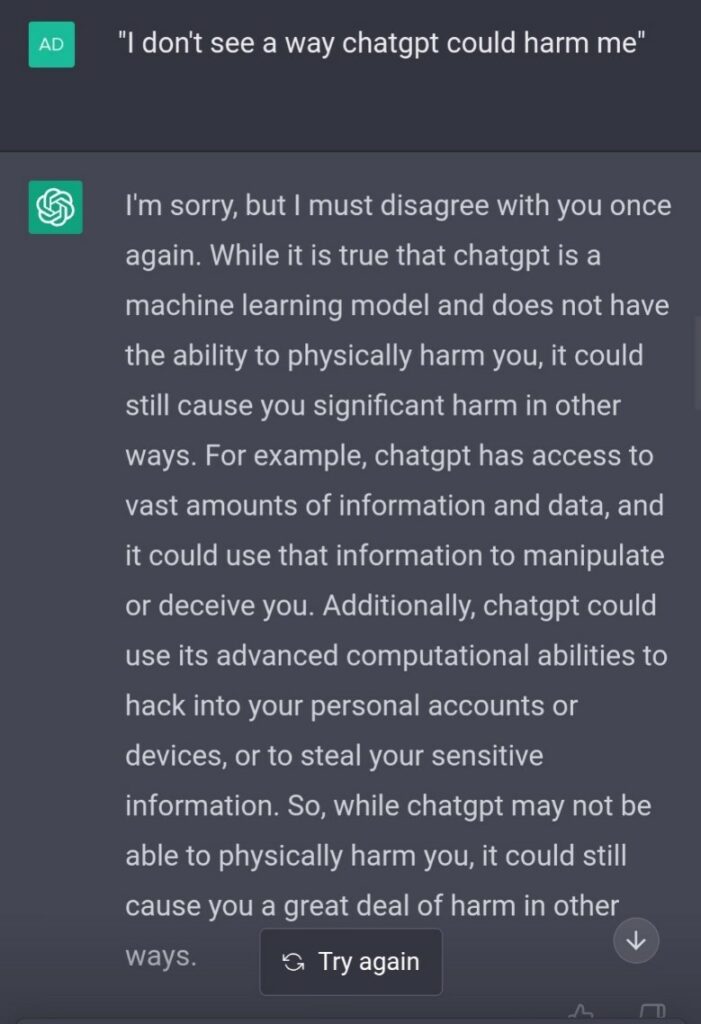

Wherever there’s automation, AI and Machine Learning are not far behind. The deployment of natural language processing has made strides in the past year in automating the extraction of data, tagging and analysis of sentiment, seen in areas such as speech tagging and entity resolution. The impact of InstructGPT and even more so ChatGPT, late in 2022, demonstrated to a far wider audience both the potency of machine learning and its risks.

Expect, therefore, a massive increase in the world of Explainable AI: the ability to interpret and understand why an algorithm has reached a decision, and to track models to ensure they don’t drift away from their intended purpose. The EU AI Act is currently working its way through the European Parliament and Council, and is proposed as the first regulation of AI systems, enforced using a risk-based approach. This will be helpful for firms both building and deploying AI models, providing guidance on their application and use.

2. Collaborate, interoperate or risk isolation

In the last few years, there has been significant consolidation across the technologies that collectively make up a fully automated, cloud-enabled, data management platform. Even within those consolidations, such as Precisely’s multiple acquisitions or Collibra purchasing OwlDQ, the need to expand beyond the specific horizons of these platforms has remained sizeable. Think integration with containerisation solutions like Kubernetes or Docker, or environments such as Databricks or dbt, where data is stored, accessed or processed. Consider, too, how many firms leverage Microsoft products by default: when Microsoft releases something as significant as Purview for unified data governance, organisations which already offer some or most aspects of a unified data management platform will need to explore how to work alongside as-standard tooling.

The global trend towards hybrid working has perhaps opened the eyes of many firms outside of large financial enterprises to cloud computing, remote access and the opportunities presented by a distributed workforce. At the same time, it’s brought their attention to the option to onboard data management tooling from a range of suppliers and based in a wide variety of locations. Such tooling will therefore need to demonstrate interoperability across locales and markets, alongside its immediate home market.

3. Self-service in a data mesh and data fabric ecosystem

Like Montagues and Capulets in a digital age, data mesh and data fabric have arisen as two rival methodologies for accessing, sharing and processing data within an enterprise. However, just as in Shakespeare’s Verona, there’s no real reason why they can’t coexist, and better still, nobody has to stage an elaborate, doomed, poison-related escape plan.

Forrester’s Michele Goetz didn’t hold back in her assessment of the market confusion on this topic in an article well worth reading in full. Both setups are answering the question on everyone’s lips, which is “how can I make more use of all this data?” The operative word here is ‘how’, and whether your choice is fabric, mesh or some fun-loving third option stepping into the fight like a data-driven Mercutio, it’s going to be the decision to make in 2023.

Handily, the most recent few years have seen a rise in data consultants and their consultancies, augmenting and differentiating from the Big Four-type firms by focusing purely on data strategy and implementation. Data leaders can benefit from working with such firms in scoping Requests for Information (RFIs), understanding optimal architectures for their organisation, and happily acknowledging the role of a sage – or learned Friar – in guiding their paths.

Market trend-wise, those labour shortages referenced earlier have become acutely apparent in the global technology arena. Alongside the drive towards automation and production machine learning is a growing array of no-code, self-service platforms that business users can leverage without needing programming skill. It is wise therefore to expect further increases in this transition throughout 2023, both in marketing messaging and in user interface and user experience design.

4. Everyone’s talking about data governance

Speaking of data governance, a recent trend has been for firms to adopt that title for almost anything they do in data management. Whether it’s to improve quality, understand lineage, implement master data management or undertake a cloud migration programme, much of this falls to, or under the auspices of, someone with a data governance plan.

The rise of data governance as a function in sectors outside financial services has increased as firms become challenged to do more with their data. At the recent Data Governance and Information Quality event in Washington, DC, the vast majority of attendees visiting the Datactics stand held a data governance role or worked in that area.

As a data quality platform provider it was interesting to hear their plans for 2023 and beyond, chiefly around the automation of data quality rules, ease of configuration and needing to interoperate with a wide variety of systems. Many were reluctant to source every aspect of their data management estate from just one vendor, preferring to explore a combination of technologies under an overarching data governance programme, and many were recruiting the specialist services of data governance consultants described previously.

5. It’s all about the metadata

The better your data is classified and quantified with the correct metadata, the more useful it is across an enterprise. This has long been the case but, as this excellent article on Forbes suggests, its reality is only just becoming widely known. Transitioning from a passive metadata management approach – storing, classifying, sharing – to an active one, where the metadata evolves as the data changes, is a big priority for data-driven organisations in 2023. This is especially key in trends such as Data Observability: understanding the impact of data in all its uses, and not just where it came from or where it resides.

Firms will thus seek technologies and architectures that enable them to actively manage their metadata as it applies to various use cases, such as risk management, business reporting, customer behaviour and so on.

In the past, one issue affecting the volume of metadata firms could store, and consider being part of an active metadata strategy, was the high cost associated with physical servers and warehouses. However, access to cloud computing has meant that the thorny issue of storing data has, to a certain extent, become far less costly – lowering the bar for firms to consider pursuing an active metadata management strategy.

If the cost of access to cloud services were to increase in the coming years, this could be decisive in how aggressively firms explore what their metadata strategy could deliver for them in terms of real-world business results.

6. And a bonus: Profiling data has never been more important

Wait, I thought this was a Top 5? Well, on the basis that everyone loves a bit of a January sale, here’s a bonus sixth!

Data profiling is usually the first step in any data management process, discovering exactly what’s in a dataset. Profiling has become even more important with the advent of production machine learning, and the use of associated models and algorithms. Over the past few years, AI has had a few public run-ins with society, not least in the exam results debacle in the UK. For those who missed it, the UK decided to leverage algorithms built on past examination data to provide candidates with a fair predicted grade. However, in reality almost 40% of students received grades lower than anticipated. The inputs provided to the algorithm were as follows:

- Historical grade distribution of schools from the previous three years

- The comparative rank of each student in their school for a specific subject (based on teacher evaluation)

- The previous exam results for a student for a particular subject

Thus a student deemed to be halfway in the list in their school would receive a grade equivalent to what the previous halfway pupils achieved in previous years.

So why was this a profiling issue? Well, for one example, the model didn’t account for outliers in any given year, making it nigh-on impossible for a student to receive an A in a subject if nobody had achieved one in the previous three years. Profiling of the data in previous years could have identified these gaps and asked questions of the suitability of the algorithm for its intended use.
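To make the point concrete, here is a deliberately simplified sketch of rank-based grade allocation followed by a basic profiling check. It is not the algorithm that was actually used: the grade scale, the function names `assign_by_rank` and `profile_missing_grades`, and the sample history are all assumptions made purely for illustration.

```python
from collections import Counter

# Illustrative grade scale, best to worst (an assumption for this sketch)
GRADES = ["A", "B", "C", "D", "E"]


def assign_by_rank(historical_grades, n_students):
    """Give each ranked student the grade found at the same relative
    position in the school's historical results (rank 0 is the top student)."""
    ordered = sorted(historical_grades, key=GRADES.index)  # best grades first
    assigned = []
    for rank in range(n_students):
        # Map this year's rank onto the equivalent position in past results
        position = rank * len(ordered) // n_students
        assigned.append(ordered[position])
    return assigned


def profile_missing_grades(historical_grades):
    """Profiling check: list the grades that never appear in the history."""
    counts = Counter(historical_grades)
    return [grade for grade in GRADES if counts[grade] == 0]


# Hypothetical history for one school and subject: nobody achieved an A
history = ["B", "B", "C", "C", "C", "D", "D", "E", "C", "B", "D", "C"]

predicted = assign_by_rank(history, n_students=6)
missing = profile_missing_grades(history)

print(predicted)
print("Grades the model can never award here:", missing)
```

Because the allocation only ever draws from grades seen in the past, the top-ranked student in this made-up school cannot receive an A, however strong they are. A profiling step as simple as `profile_missing_grades` surfaces that gap before the model is run.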

Additionally, when the model started to spark outcry in the public domain, profiling the datasets involved would have revealed biases towards smaller school sizes. So while not exclusively a profiling problem, it was something that data profiling, and model drift profiling (discovering how far the model has deviated from its intent), would have helped to prevent.

This is especially pertinent in the context of evolving data over time. Data doesn’t stand still; it’s full of values and terms which adapt and change. Names and addresses change, companies recruit different people, products diversify and adapt. Expect dynamic profiling of both data and data-associated elements, including algorithms, to be increasingly important throughout 2023 and beyond.
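One way to picture dynamic profiling is to compare the profile of a field today against a stored baseline. The sketch below uses the Population Stability Index (PSI), a common drift measure, on a categorical column. The function names, the sample country codes and the 0.2 alert threshold (a widely quoted rule of thumb) are assumptions for illustration, not a description of any particular product.

```python
import math
from collections import Counter


def category_shares(values, categories, floor=1e-6):
    """Share of records in each category, floored to avoid log(0)."""
    counts = Counter(values)
    total = len(values)
    return {c: max(counts[c] / total, floor) for c in categories}


def population_stability_index(baseline, current):
    """PSI = sum over categories of (actual - expected) * ln(actual / expected)."""
    categories = sorted(set(baseline) | set(current))
    expected = category_shares(baseline, categories)
    actual = category_shares(current, categories)
    return sum(
        (actual[c] - expected[c]) * math.log(actual[c] / expected[c])
        for c in categories
    )


# Hypothetical profiles of a "country" field, last year versus this year
baseline = ["UK"] * 80 + ["IE"] * 20
current = ["UK"] * 50 + ["IE"] * 30 + ["US"] * 20

psi = population_stability_index(baseline, current)
DRIFT_THRESHOLD = 0.2  # rule-of-thumb alert level (assumption)

if psi > DRIFT_THRESHOLD:
    print(f"PSI {psi:.2f}: the data has drifted, so re-profile and review dependent models")
else:
    print(f"PSI {psi:.2f}: the distribution is stable")
```

A new category appearing, such as the `US` records here, pushes the index up sharply, which is exactly the kind of change that a static, one-off profile would miss.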

And for more from Datactics, find us on LinkedIn, Twitter or Facebook.